Surface Analysis

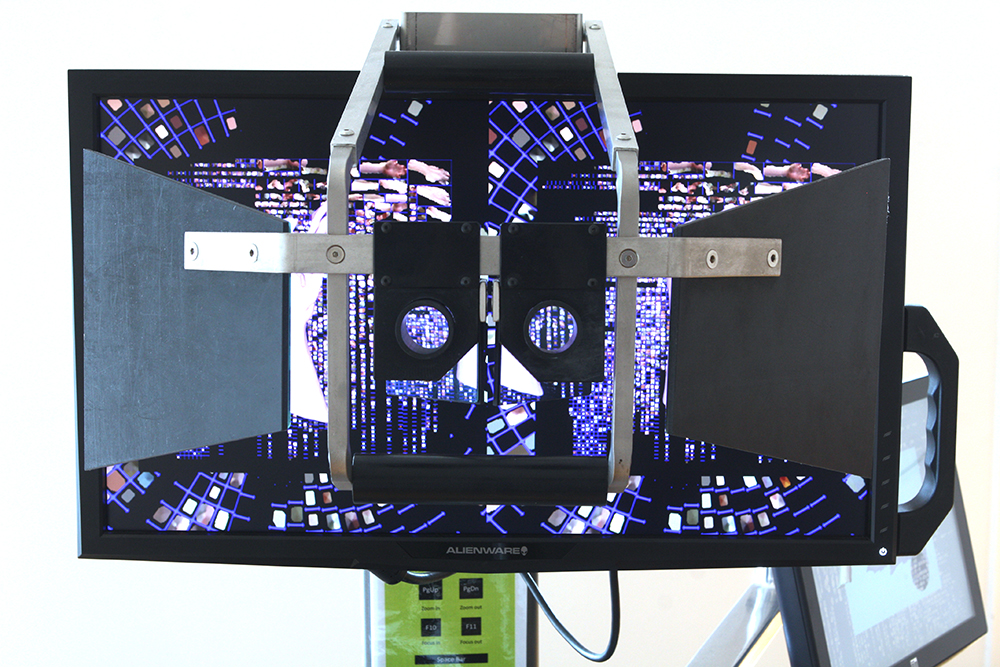

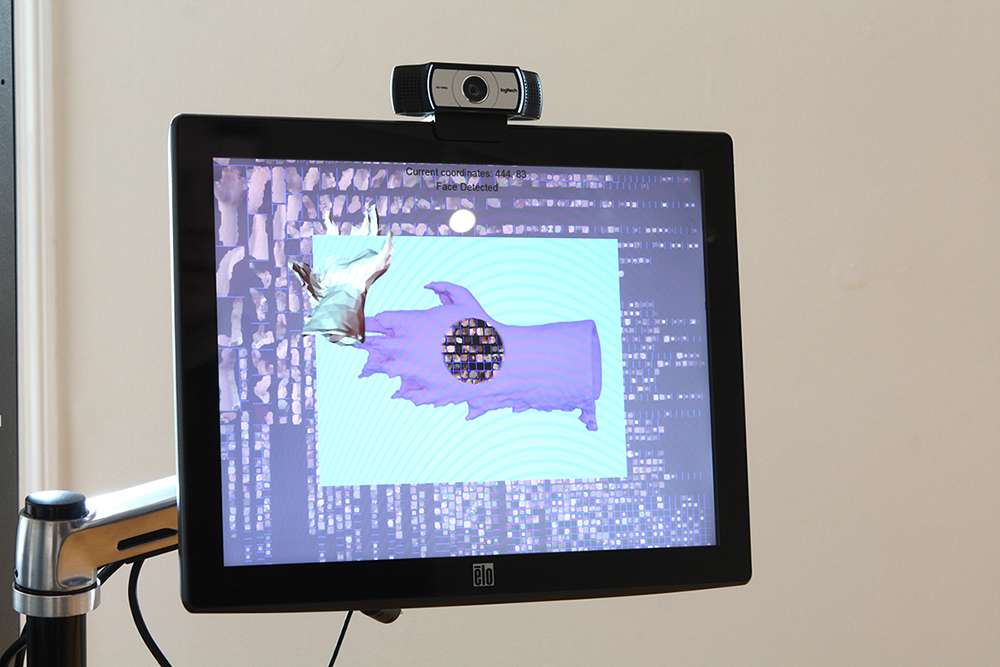

NeuroTouch simulator, 3D animation, facial recognition software, p5.js, 3D print

2019

Dimensions variable

Surface Analysis was part of my residency capstone and solo show The Practical Applications of Networks to the Body at the International Museum of Surgical Science.

For a more in-depth description of the references, inspiration, and research surrounding the work in the show and my residency, see the blog post I wrote for the museum here :)

The NeuroTouch simulator is a neurosurgery trainer that's part of the museum's collection. It uses a stereoscopic viewer and haptic feedback to let users perform virtual surgery in a gamified 3D space. I was interested in using this setup for its formal presence and, because a computer runs it, for its immediate availability to be part of a local area network (LAN). The animation in Surface Analysis plays in the NeuroTouch's stereoscopic viewer and features the 3D scan of a hand used throughout the show, rotating and squirming as it's being analyzed and "scanned" into the local network. Recorded visitor touch data from the piece Touch Analysis is fed into the second screen on the NeuroTouch station, which is the second part of Surface Analysis. On this screen, a website connected to the LAN of the piece Server_001: 192.168.0.100 displays an animated 3D model of the hand. The hand's movement across the screen is driven by an algorithm that randomly chooses from the cache of saved visitor touches, and the screen displays the touch coordinates currently being used to move the hand around. A webcam also scans for faces, and when it detects one, the visitor can rotate the hand by moving their body (face) back and forth. The visitor's body is implicated in different ways within the network.
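For anyone curious about the logic behind the second screen, here is a rough sketch of how the random touch selection and the face-driven rotation might work. This is not the code from the piece: the names `touchCache`, `pickTouch`, `stepToward`, and `faceToRotation` are hypothetical, the touch values are made up, and I'm assuming touches are stored as normalized x/y coordinates and that "back and forth" means the face's horizontal position in the webcam frame.

```javascript
// Hypothetical cache of recorded touches, normalized to 0..1 (illustrative values only).
const touchCache = [
  { x: 0.12, y: 0.80 },
  { x: 0.55, y: 0.33 },
  { x: 0.91, y: 0.47 },
];

// Pick one saved touch at random. `rand` is injectable so the choice can be tested.
function pickTouch(cache, rand = Math.random) {
  const index = Math.floor(rand() * cache.length);
  return cache[index];
}

// Move the hand a fraction of the way toward the chosen touch (linear interpolation).
function stepToward(pos, target, amount = 0.05) {
  return {
    x: pos.x + (target.x - pos.x) * amount,
    y: pos.y + (target.y - pos.y) * amount,
  };
}

// Map the detected face's horizontal position (assumed 0..1 across the webcam frame)
// to a rotation angle in radians, from -PI/2 to PI/2.
function faceToRotation(faceX) {
  const clamped = Math.min(1, Math.max(0, faceX));
  return (clamped - 0.5) * Math.PI;
}
```

In a p5.js sketch, something like `stepToward` would run once per frame inside `draw()`, with a new target picked from the cache whenever the hand gets close to the current one.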

You can visit the website from Surface Analysis here. Facial recognition will work better with adequate lighting.